Squeezing Django performance for 14.9 million users on WhatsApp

This is a blog version of a talk I gave at DjangoCon Europe 2023, which took place in Edinburgh.

It was the start of the pandemic, lockdowns were in place, and misinformation was spreading. In South Africa, a lot of this misinformation was being spread through WhatsApp. In a partnership with Turn.io, we created a WhatsApp service for the South African National Department of Health (NDoH), with the goal of providing up-to-date evidence-based information.

From a technology perspective, it started off as a simple service: we matched user inputs to a set of keywords, which would give different responses. Through some clever UX design, we could create a service where users could browse through a menu system and find the information that they want. We went with this approach as we knew it would be quick to get up and running, and able to easily handle large traffic volumes.

Once we were happy with the way this was running, it was time to move on to creating some more technologically advanced features. To be able to solve the kinds of problems we were seeing that could not be solved with the existing approach. The first one of these was a feature that we named HealthCheck.

Initially, COVID-19 tests were not widely available, so there were guidelines in place to determine who should be tested. These guidelines were confusing and changed often. We created a feature that would ask a set of questions relating to symptoms. We, together with the National Institute for Communicable Diseases (NICD), developed a risk-classification algorithm that would take these answers, and give advice on whether they should go get tested, quarantine at home, or take no action.

Furthermore, we also wanted these responses to feed into the NDoH's datalake, to help them detect hotspots and direct resources to where they are most needed. This feature is a lot more complex than what could be achieved with a keyword based system, and we required a place to store and surface these responses for the NDoH's team to ingest. So of course… Django to the rescue!

So we used Django as our web framework, PostgreSQL as our database, and Django Rest Framework (DRF) to help us build our API quickly and consistently. This is a stack that we're very confident running, and so in no time we were up and running, storing the results for the HealthChecks, and surfacing that data through the API.

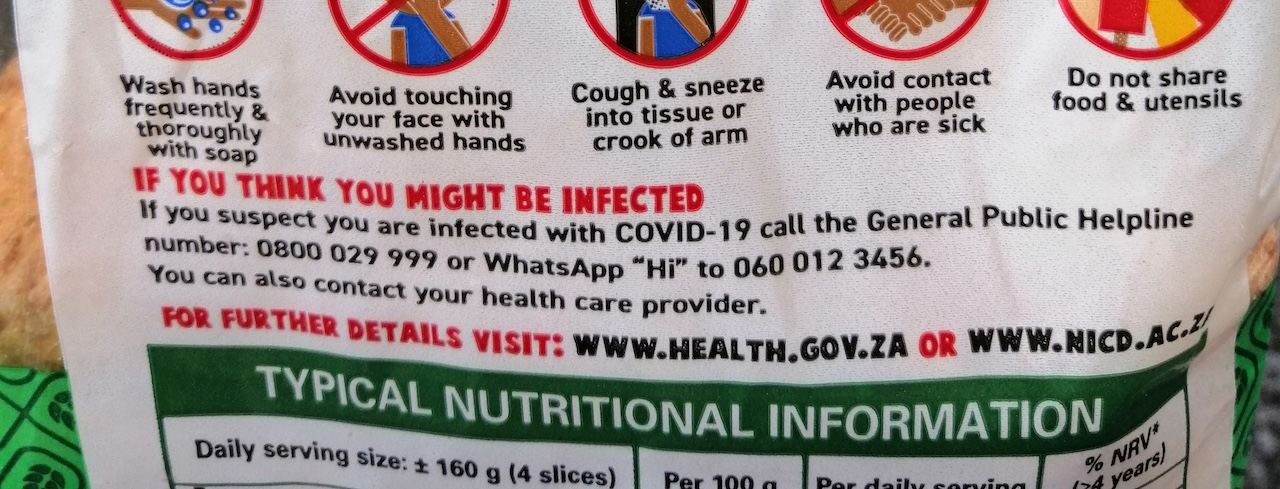

Now this service really started taking off, and very soon we were storing 10s of millions of completed HealthChecks. I even saw the service being promoted on the packaging of a loaf of bread that I bought.

We were using DRF's cursor pagination, so our API had no issues storing and surfacing that data in multiple pages, but our Django Admin page had become unusable. We had made one of the common mistakes when dealing with large tables and PostgreSQL (or most SQL databases).

When dealing with large tables, you should never do a count(*) over the whole table, there's even a whole page in the PostgreSQL documentation about it. Looking into our Django Admin page, it turns out that we were performing a count(*) twice! But these pages are automatically generated by Django, so how can we change this?

The first one is fairly simple. When filtering the results on the Django Admin page, text appears on the page similar to 99 results (103 total). The number in the brackets is the result of a count(*). This query seems to be run regardless of whether the results have been filtered. There is a configuration value that you can set to disable the count, which changes the text to (Show all).

The second count is more complex to get rid of. This is related to the pagination that Django Admin uses. Instead of using cursor pagination like we do with the API, Django Admin uses page-based pagination. This requires us to know the number of pages, so that we can display all the pages and allow the user to jump straight to any page. Unlike cursor pagination, where you can only go forward or backwards one page at a time. In order to provide this, we need to count the number of records, so that we know how many pages we want to split the records between.

Unfortunately we can't change the type of pagination used in Django Admin, but we can create a custom pagination class, where we can customise some aspects of the page-based pagination, specifically how we count the total number of records.

The second key to the puzzle is the pg_class table in PostgreSQL. In this table, there's a reltuples row, which contains an estimate of the number of active rows in the table, which is used by the query planner. We can also use this estimate for our Django admin, as for our use case we don't really need an accurate page count. We usually just want to view the top few pages of latest records, or filter or search on indexed columns.

The final piece is the statement_timeout client configuration. This allows us to set a time limit for a query. Using this, we can try to do a full count, which should complete in a short time for the smaller tables where we want accurate counts, but then for larger tables we can fall back to the estimate.

Putting this all together, we can replace the default count function in our custom pagination class with:

class ApproximatePaginator(Paginator):

"""

Paginator that returns an approximate count if doing the real count takes too long

A mix between:

https://hakibenita.com/optimizing-the-django-admin-paginator

https://wiki.postgresql.org/wiki/Count_estimate

"""

@cached_property

def count(self):

cursor = connection.cursor()

with transaction.atomic(), connection.cursor() as cursor:

cursor.execute("SET LOCAL statement_timeout TO 50")

try:

return super().count

except OperationalError:

pass

with connection.cursor() as cursor:

cursor.execute(

"SELECT reltuples FROM pg_class WHERE relname = %s",

[self.object_list.query.model._meta.db_table],

)

return int(cursor.fetchone()[0])

Now you may be wondering, how did we know that there are two count(*)s in our Django Admin page, and figure out what was causing them in order to fix them? This next section is going to deal with how we measure performance, find bottlenecks, and then fix them.

You should always measure your performance before making any changes, to be able to know the effect that your changes are having to your performance.

When measuring performance, it's best to do it with a full database. This will avoid issues that only come up when there's lots of data that needs to be processed. Often everything will work well in testing, and in your QA environment, but then have terrible performance once running in production. By ensuring you're measuring performance with a full database, you can avoid a lot of these issues.

For some services, it's as easy as downloading a dump of the production database, and then running your performance testing using that. For other services, that data is too sensitive, and you cannot use it for performance testing, so you have to generate new, fake data, that is as close as possible to the production data.

For this, we used Faker. This allowed us to generate a lot of data that is close to our production data. An example of how this data is generated:

def generate_vaccine_registration():

data = {}

data["gender"] = faker.random_element(("Male", "Female", "Other"))

if data["gender"] == "Male":

data["firstName"] = faker.first_name_male()

data["surname"] = faker.last_name_male()

if data["gender"] == "Female":

data["firstName"] = faker.first_name_female()

data["surname"] = faker.last_name_female()

if data["gender"] == "Other":

data["firstName"] = faker.first_name_nonbinary()

data["surname"] = faker.last_name_nonbinary()

data["dateOfBirth"] = faker.date_between("-100y", "-60y").isoformat()

data["mobileNumber"] = faker.numerify("2782#######")

...

Next, we need a way of faking users interacting with our system. For this we used Locust. Locust allows us to define the user journeys in code, and then run various realistic load testing scenarios. An example of defining the user journeys:

@task

def vaccine_registration(self):

registration = generate_vaccine_registration()

msisdn = registration["mobileNumber"]

dob = date.fromisoformat(registration["dateOfBirth"])

self.start_session(msisdn)

# menu

self.send_message(msisdn, "1")

# age gate

self.send_message(msisdn, "1")

# terms

self.send_message(msisdn, "1")

self.send_message(msisdn, "1")

Now that we can generate fake data, and fake user traffic, we can now figure out which user scenarios/endpoints are too slow. But how do we figure out why those endpoints are slow?

One of the tools that we use is Django Debug Toolbar. This is a useful tool to see why a specific Django page is slow, and is what we used to figure out the two count(*)s that were causing the issues on our Django Admin page.

If your issue is due to a slow SQL query, we can use either connection.queries or the Django Debug toolbar to get the SQL query that is causing issues. In our case it was fairly straightforward to figure out the issue with the query, but for more complex queries, if you prepend EXPLAIN ANALYSE to the query, and run it. It gives an output that is very useful for finding out why the query is slow, and helps to figure out any useful indexes.

Another common issue with Django (and most ORMs), is that it's very easy to run into the N + 1 query issue. For this, we can use the assertNumQueries assertion in our unit tests. This ensures that the number of queries does not change. This can help us with N + 1 query issues, as well as for critical endpoints, to ensure that code changes do not introduce additional queries, which could affect performance. If they do, this gives us a failing test that prompts us to properly review this extra query and ensure it does not impact performance.

One other way to improve performance is to look at switching your interpreter from CPython to PyPy. It is very compatible with most Python code, and for a lot of use cases, can give you better performance.

In your PostgreSQL configuration, there's a log_min_duration_statement configuration parameter. This will log any SQL statements that take longer than the configured value. By enabling this and checking the logs periodically, this can help you find any issues in production resulting from slow SQL queries, that you might not have been aware of.

And then lastly, a shoutout to pythonspeed.com, which has a lot of great articles on improving performance with python. Specifically, there's a great article on configuring gunicorn for docker, which can be summarised into these configuration options:

--workers=2

--threads=4

--worker-class=gthread

--worker-tmp-dir=/dev/shm